RoSA: Towards Intuitive Multi-Modal and Multi-Device Human–Robot Interaction

Building on a prior Wizard-of-Oz study, this paper presents a fully autonomous RoSA system using speech, face, and gesture input for seamless human–robot collaboration.

From Wizard to Reality: A Fully Autonomous RoSA System for Natural Human–Robot Interaction

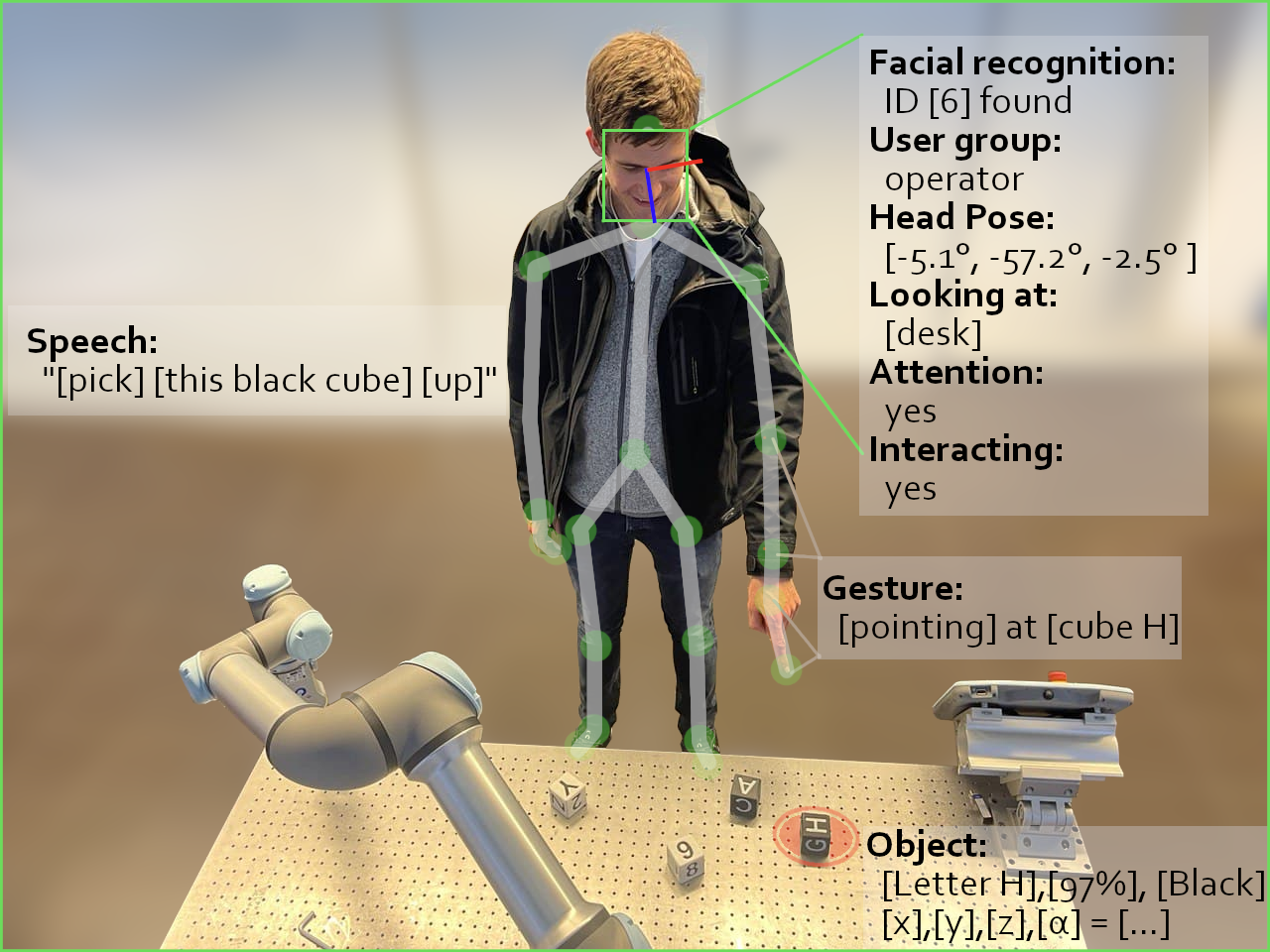

This paper presents RoSA (Robot System Assistant) as a fully operational multi-modal HRI platform, transforming insights from an earlier Wizard-of-Oz (WoZ) study into an autonomous system using speech, gesture, gaze, and face recognition.

The system was designed to enable intuitive robot control in industrial settings without requiring users to have prior technical knowledge. RoSA was evaluated through a pilot study with 11 participants in a cube-stacking and spelling task involving a UR5e cobot, simulating collaborative assembly.

Key highlights:

- RoSA is built on ROS middleware, supporting multi-user and multi-device interaction with distributed workstations.

- Users can give commands using natural speech and pointing gestures, combining both modalities for increased expressiveness.

- A modular design includes components for face detection, attention estimation, cube tracking, and robotic control, with each module open-source and reusable.

- Despite being fully autonomous, RoSA achieved usability scores comparable to those of the earlier WoZ prototype, with average ratings above 64% across the SUS, UMUX, PSSUQ, and ASQ questionnaires.

- Detailed analysis revealed that pointing precision improved significantly when the pointing direction was derived from the shoulder-tip joint pair rather than the elbow-wrist pair.

- The system dynamically adapts to user attention and identity, creating personalized, contactless, and safe HRI.
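The pointing result in the highlights can be pictured geometrically: a pointing direction is a ray through two body joints, extended until it meets the work surface. The sketch below is a minimal illustration of that idea, not the authors' implementation. It assumes 3D joint positions in metres, a z-up coordinate frame, and a horizontal table at height `table_z`; the function name `pointing_target` and all joint coordinates are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def pointing_target(origin: Vec3, tip: Vec3, table_z: float = 0.0) -> Optional[Tuple[float, float]]:
    """Extend the ray origin -> tip until it hits the plane z = table_z.

    Returns the (x, y) hit point, or None if the ray never reaches the plane.
    Assumption: z points up and the table is horizontal.
    """
    dx, dy, dz = (t - o for t, o in zip(tip, origin))
    if abs(dz) < 1e-9:
        return None  # ray runs parallel to the table
    scale = (table_z - origin[2]) / dz
    if scale <= 0:
        return None  # ray points away from the table
    return (origin[0] + scale * dx, origin[1] + scale * dy)


# Hypothetical joint positions for one pointing pose (metres).
shoulder = (0.0, 0.0, 0.5)
elbow = (0.1, 0.0, 0.3)
wrist = (0.3, 0.1, 0.25)
fingertip = (0.4, 0.1, 0.2)

# The same pose yields different targets depending on the joint pair used.
target_shoulder_tip = pointing_target(shoulder, fingertip)
target_elbow_wrist = pointing_target(elbow, wrist)
```

Because the two joint pairs define different rays, small differences in forearm angle can move the estimated target considerably, which is one plausible reason the choice of joint pair affects precision.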

Evaluation:

- Participants completed complex stacking and spelling tasks in an average of 25 minutes.

- The system was rated positively in module-specific feedback, especially for attention tracking and robot control.

- Challenges remain in wake-word detection and speech understanding, indicating areas for future refinement.
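For readers unfamiliar with the questionnaires, the following sketch shows the standard scoring rule for the System Usability Scale (SUS), one of the four instruments named in the highlights. It assumes the usual ten items answered on a 1 to 5 scale; how the paper aggregated or normalized scores across SUS, UMUX, PSSUQ, and ASQ is not stated here, so this covers SUS only. The function name `sus_score` is hypothetical.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one SUS questionnaire on the usual 0-100 scale.

    Odd-numbered items are positively worded and contribute (answer - 1);
    even-numbered items are negatively worded and contribute (5 - answer).
    The summed contributions (0-40) are multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    total = 0
    for item_number, answer in enumerate(responses, start=1):
        if not 1 <= answer <= 5:
            raise ValueError("answers must be on a 1-5 scale")
        total += (answer - 1) if item_number % 2 == 1 else (5 - answer)
    return total * 2.5


# A participant who answers neutrally (3) on every item.
neutral = sus_score([3] * 10)
```

A study-level SUS value is then typically the mean of the per-participant scores.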

This work represents a crucial step from simulation to reality, grounding the RoSA interaction concept in a robust and extensible robotic framework ready for real-world HRI applications.

Full-Text Access

https://doi.org/10.3390/s22030923

Citing

    @article{RoSA2,
      title={Robot System Assistant (RoSA): Towards Intuitive Multi-Modal and Multi-Device Human-Robot Interaction},
      author={Strazdas, Dominykas and Hintz, Jan and Khalifa, Aly and Abdelrahman, Ahmed A and Hempel, Thorsten and Al-Hamadi, Ayoub},
      journal={Sensors},
      volume={22},
      number={3},
      pages={923},
      year={2022},
      publisher={MDPI},
      doi={10.3390/s22030923}
    }