Multimodal Engagement Prediction in Multiperson Human–Robot Interaction

A real-time multimodal system for predicting engagement and disengagement in collaborative industrial HRI, using deep learning and rule-based approaches.

Real-Time Engagement Estimation for Human–Robot Collaboration

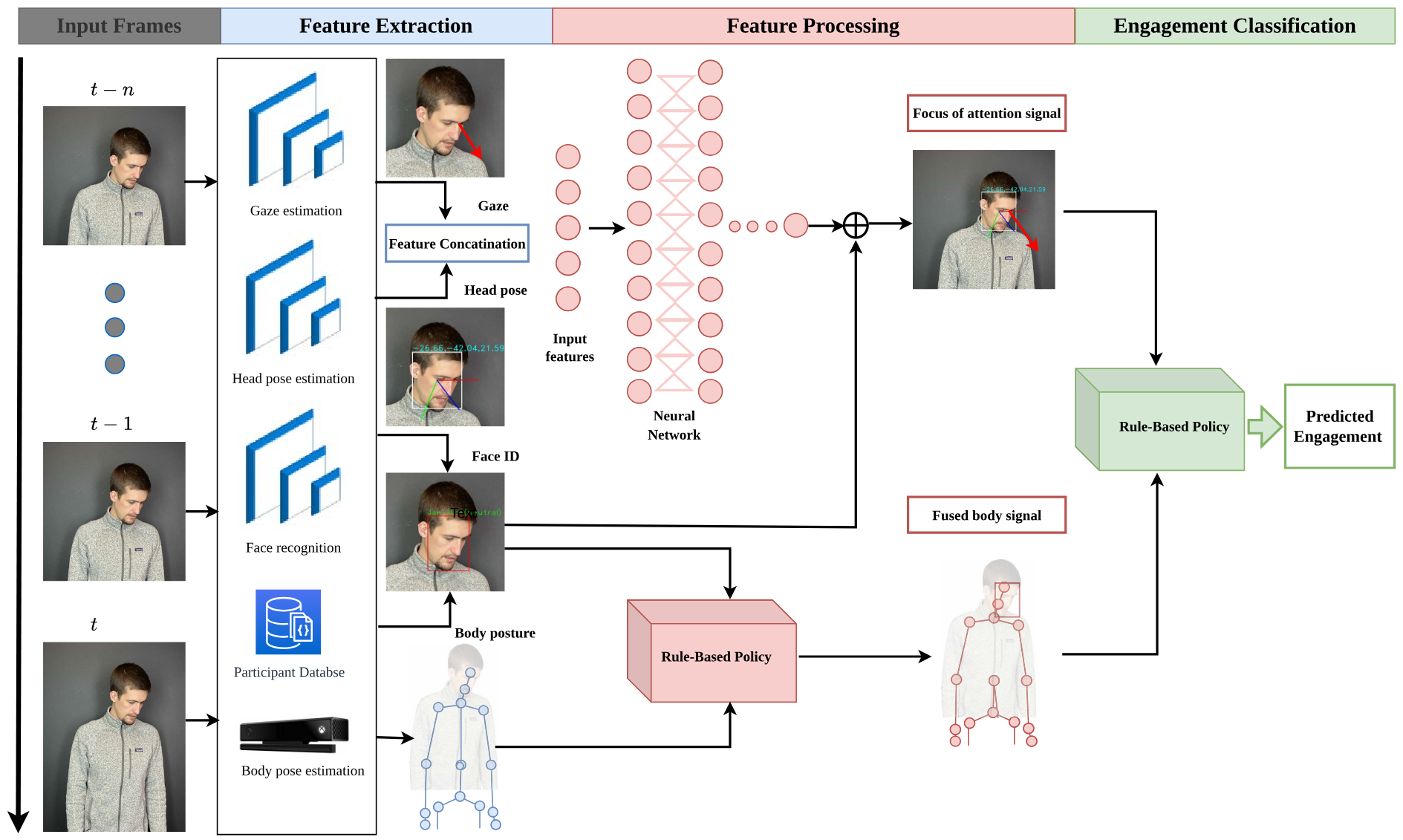

This study presents a multimodal engagement prediction model for human–robot interaction in industrial environments. Designed for multi-person, open-world settings, the system uses a combination of deep learning and rule-based policies to predict when humans intend to interact with a collaborative robot (cobot) and when they disengage.

Key features:

- Three-stage architecture:
  - Deep learning for feature extraction (gaze, head pose, body posture, face ID)
  - A neural network to estimate the focus of attention
  - Rule-based classification to decide on engagement/disengagement

- Person identification via face recognition allows tracking and re-engagement across multiple users.

-

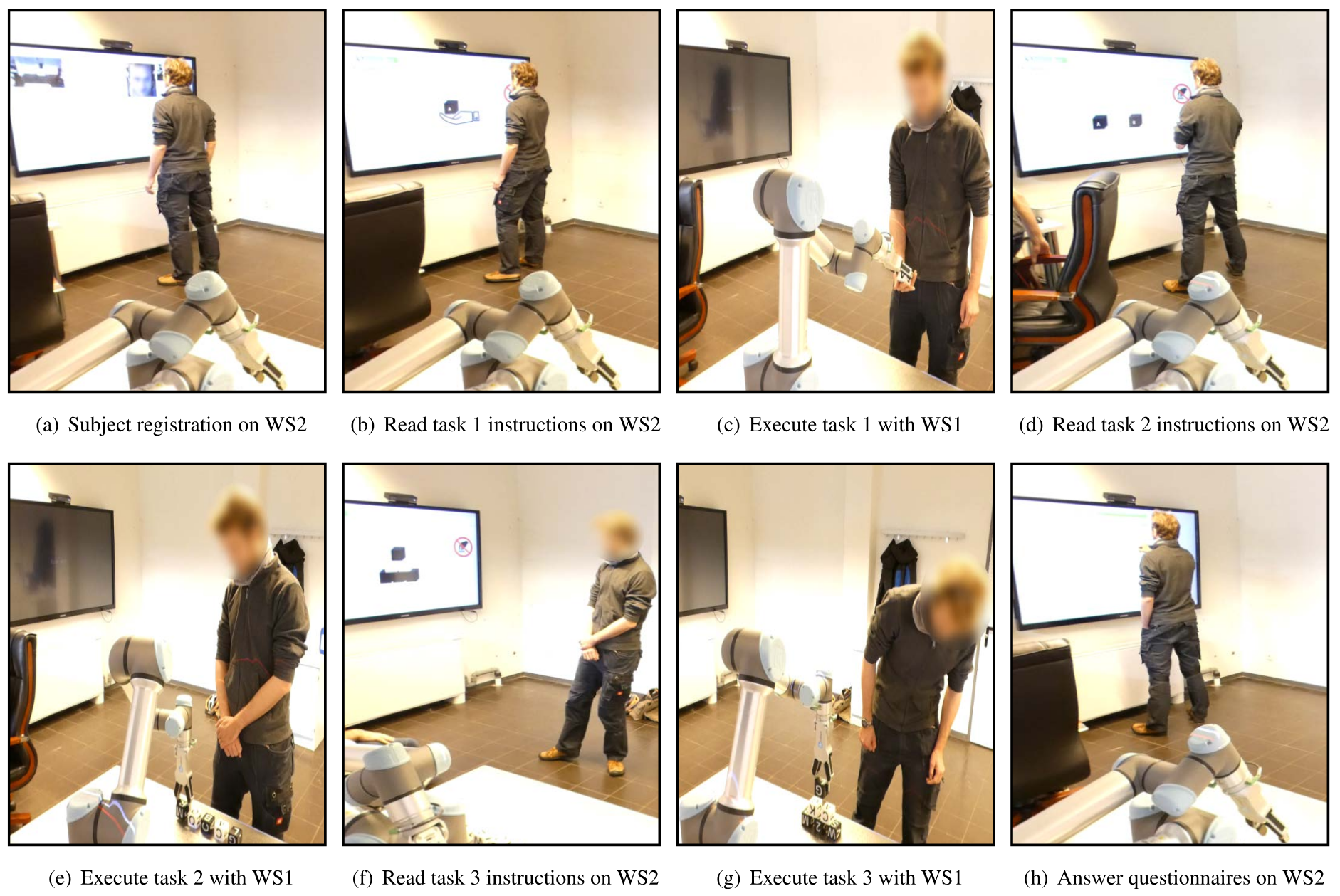

Validated through an study with 11 participants, performing collaborative tasks with a UR5e cobot.

- Achieved performance:
  - Precision: 96%
  - Recall: 90%
  - F-score: 93%

- Participants rated the engagement module with a satisfaction score of 5.65 (on a 7-point scale).

- Real-time capable, with a total frame processing time of approximately 40 ms.
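The reported figures can be cross-checked with a few lines of arithmetic. The sketch below assumes that the reported F-score is the balanced F1 (the harmonic mean of precision and recall) and that frames are processed one after another, so that the per-frame time translates directly into a frame rate. The function names are illustrative and not taken from the paper.

```python
def f_score(precision: float, recall: float) -> float:
    """Balanced F-score (F1): harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def frames_per_second(frame_time_ms: float) -> float:
    """Frame rate implied by a per-frame processing time, assuming sequential processing."""
    return 1000.0 / frame_time_ms


f1 = f_score(0.96, 0.90)        # reported precision and recall
fps = frames_per_second(40.0)   # reported total frame processing time

print(f"F-score: {f1:.1%}")                # F-score: 92.9%
print(f"Throughput: {fps:.0f} frames/s")   # Throughput: 25 frames/s
```

Under these assumptions the F-score rounds to the reported 93%, and a processing time of about 40 ms corresponds to roughly 25 frames per second.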

This work supports safe, contactless, multimodal human–robot collaboration suitable for multi-user industrial applications.
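To illustrate how a rule-based third stage could turn per-frame attention estimates into engagement decisions for several people, here is a minimal sketch. It is not the authors' rule set: the `EngagementTracker` class, the `update_person` helper, the frame-count thresholds, and the `"worker_1"` identifier are all hypothetical. It assumes that the earlier stages deliver, for each recognized face ID, a boolean indicating whether that person is currently attending to the cobot.

```python
from dataclasses import dataclass, field


@dataclass
class EngagementTracker:
    """Hypothetical per-person state machine; thresholds are assumptions."""
    engage_after: int = 10      # attentive frames in a row needed to engage
    disengage_after: int = 25   # inattentive frames in a row needed to disengage
    engaged: bool = False
    _streak: int = field(default=0, init=False, repr=False)

    def update(self, attending: bool) -> bool:
        # Count consecutive frames that contradict the current state.
        if attending != self.engaged:
            self._streak += 1
        else:
            self._streak = 0
        threshold = self.disengage_after if self.engaged else self.engage_after
        if self._streak >= threshold:
            self.engaged = not self.engaged
            self._streak = 0
        return self.engaged


# One tracker per face ID, so each person keeps their own state
# and can re-engage later.
trackers: dict[str, EngagementTracker] = {}


def update_person(person_id: str, attending: bool) -> bool:
    """Update and return the engagement state for one identified person."""
    tracker = trackers.setdefault(person_id, EngagementTracker())
    return tracker.update(attending)


# Example: ten attentive frames followed by 25 inattentive ones.
frames = [True] * 10 + [False] * 25
states = [update_person("worker_1", attending) for attending in frames]
print(states[9], states[34])  # True False
```

Requiring several consecutive frames before switching state keeps a single glance toward or away from the robot from toggling the decision. At roughly 40 ms per frame, the assumed thresholds correspond to about 0.4 s to engage and 1 s to disengage.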

Fulltext Access

https://doi.org/10.1109/ACCESS.2022.3182469

Citing

```bibtex
@ARTICLE{EngagementHRI2022,
  author={Abdelrahman, Ahmed A. and Strazdas, Dominykas and Khalifa, Aly and Hintz, Jan and Hempel, Thorsten and Al-Hamadi, Ayoub},
  journal={IEEE Access},
  title={Multimodal Engagement Prediction in Multiperson Human–Robot Interaction},
  year={2022},
  volume={10},
  pages={61979--61991},
  doi={10.1109/ACCESS.2022.3182469}
}
```