ERDF research project

ENABLING

Resilient Human-Robot Collaboration in Mixed-Skill Environments

ENABLING (Resilient Human-Robot Collaboration in Mixed-Skill Environments) addresses the development of AI methods that allow the skills of robots and humans to complement each other. It thereby enables research innovations in the cross-sectional areas of IT and key enabling technologies and lays the foundation for future applications in the lead markets. The challenges lie, first, at the interface between robotics and AI; second, in the complexity of tasks in a mixed-skill environment; and third, in resilient and responsible collaboration. The project aims to meet these challenges by developing key technologies and conducting research in four areas:

1. robust capture of the user's affective state,
2. semantic environment analysis,
3. intention-based interpretation of user actions, and
4. generative models for capturing complex behavior in mixed-skill environments.

The project is funded by the European Regional Development Fund (ERDF) under grant No. ZS/2023/12/182056 and runs for four years (2024 to 2027).

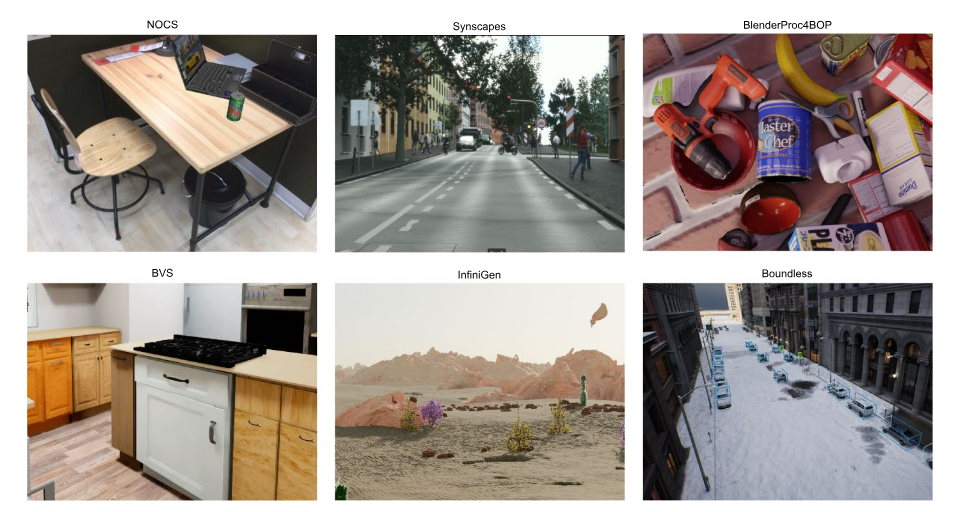

Automating Synthetic Dataset Generation for Image-based 3D Detection: A Literature Review

October 19, 2025

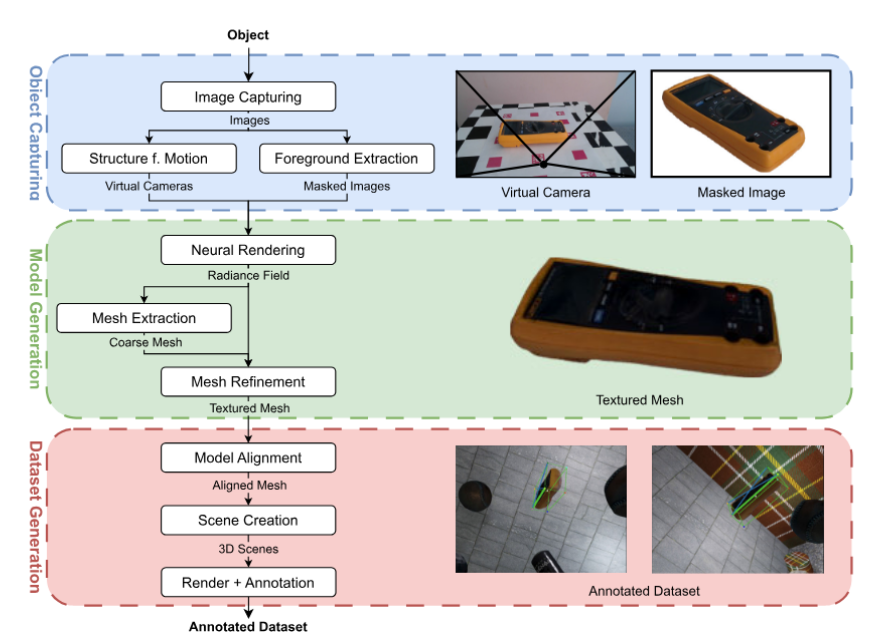

Automated 3D Dataset Generation for Arbitrary Objects

October 10, 2025

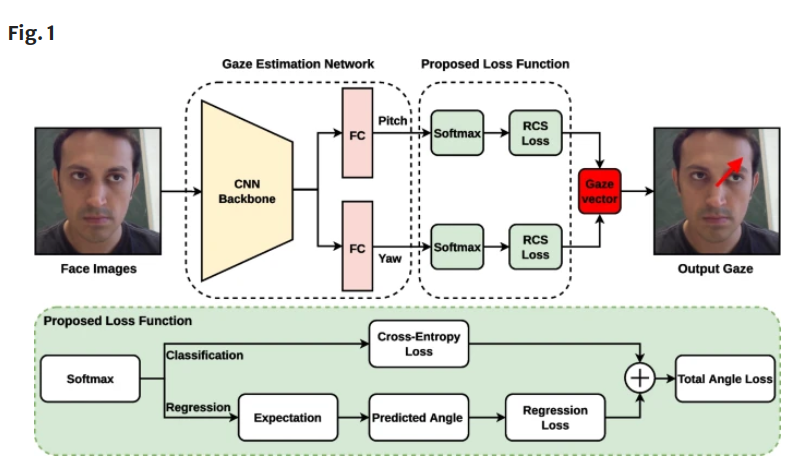

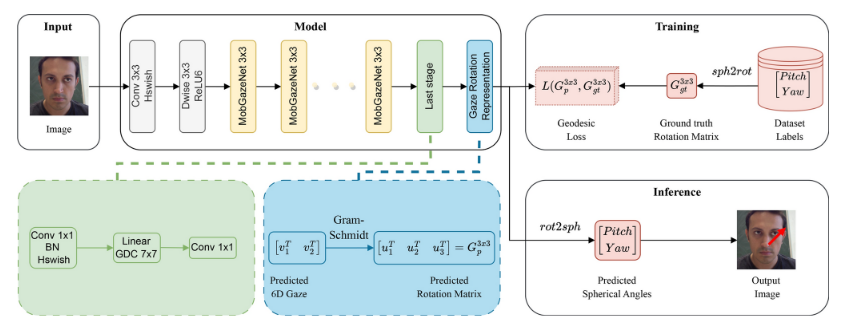

MobGazeNet: Robust Gaze Estimation Mobile Network Based on Progressive Attention Mechanisms

May 09, 2025

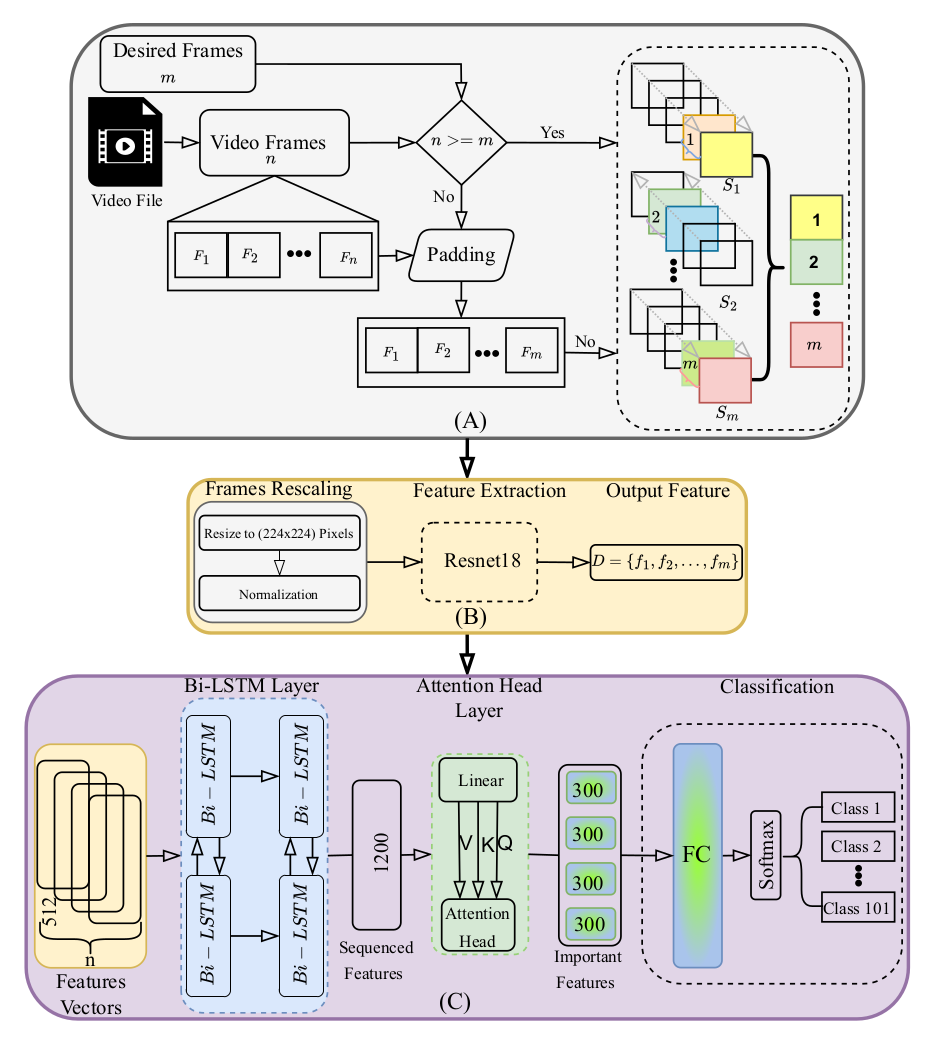

Multi-Head Attention-Based Framework with Residual Network for Human Action Recognition

May 06, 2025

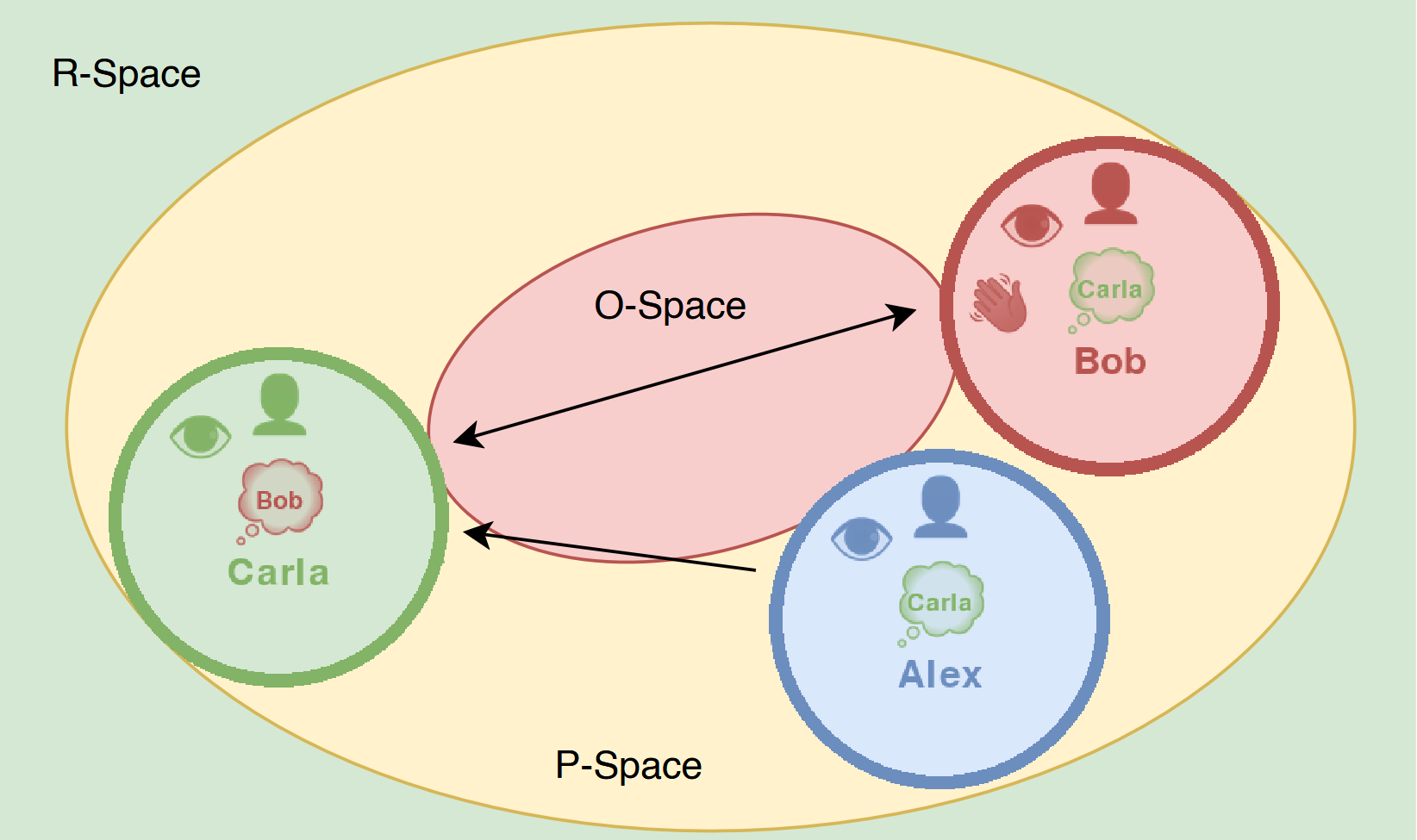

IM HERE – Interaction Model for Human Effort-Based Robot Engagement

April 29, 2025

Automating 3D Dataset Generation with Neural Radiance Fields

April 29, 2025

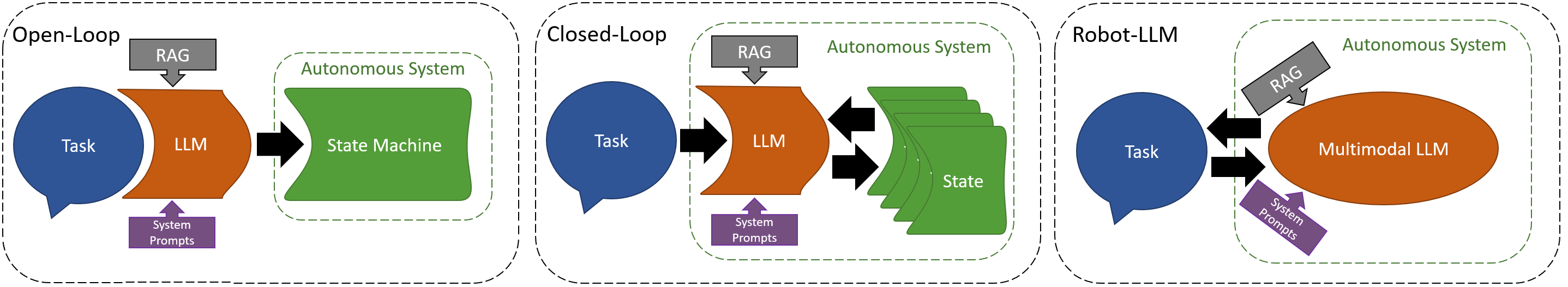

Toward Truly Intelligent Autonomous Systems: A Taxonomy of LLM Integration for Everyday Automation

April 28, 2025

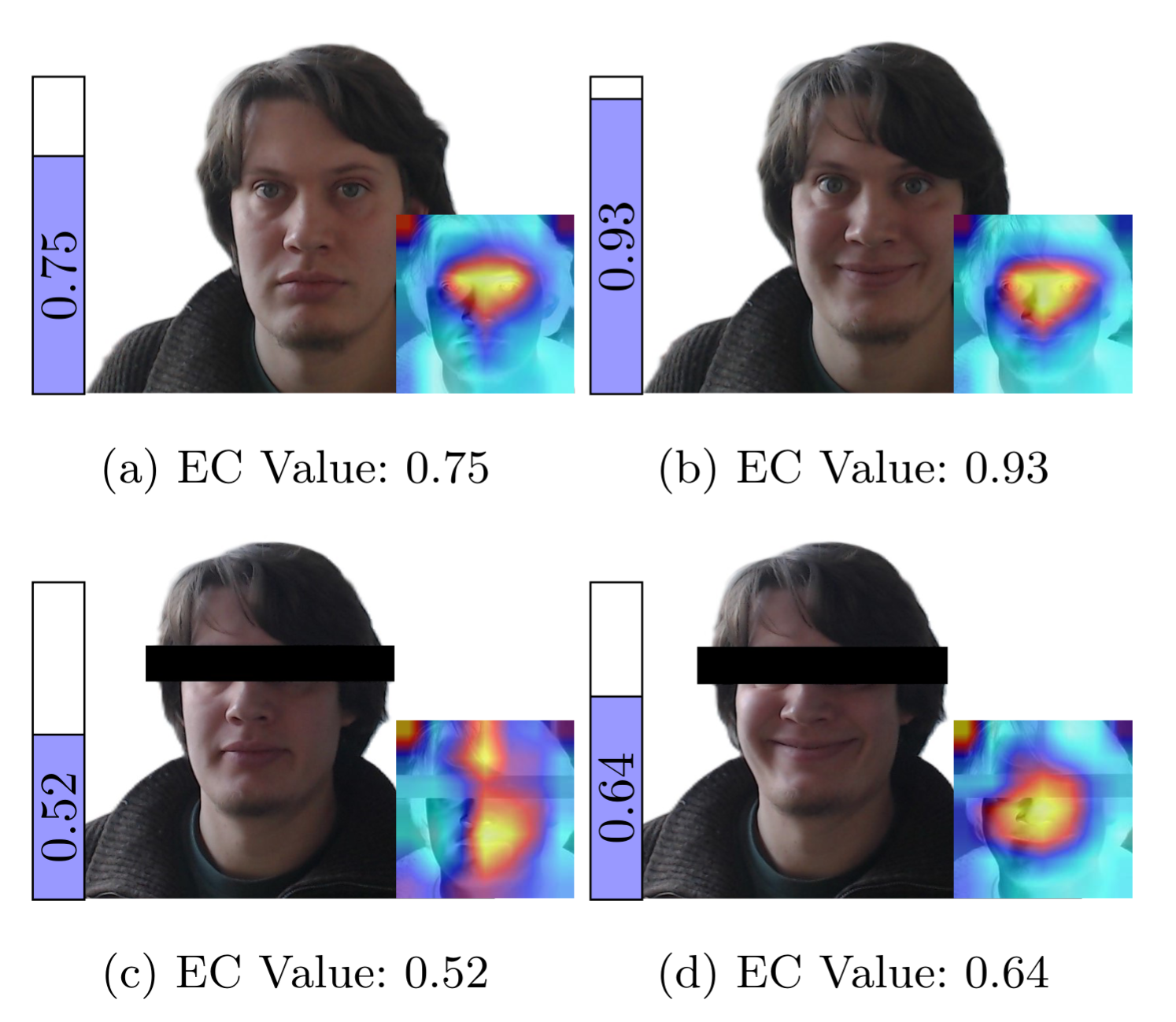

Eye Contact Based Engagement Prediction for Efficient Human-Robot Interaction

April 28, 2025

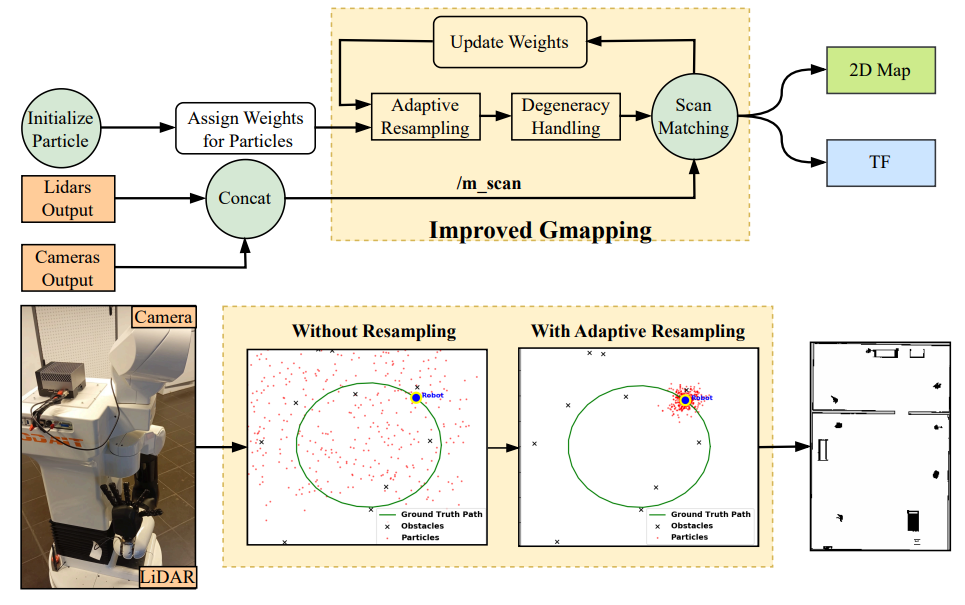

Mobile Robot Navigation with Enhanced 2D Mapping and Multi-Sensor Fusion

April 10, 2025