News

News & blog posts

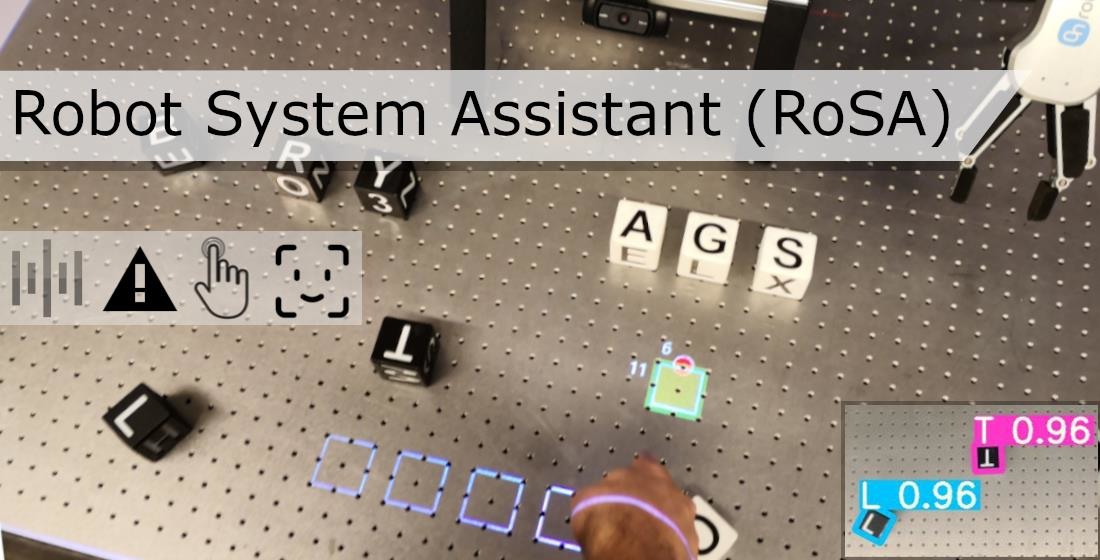

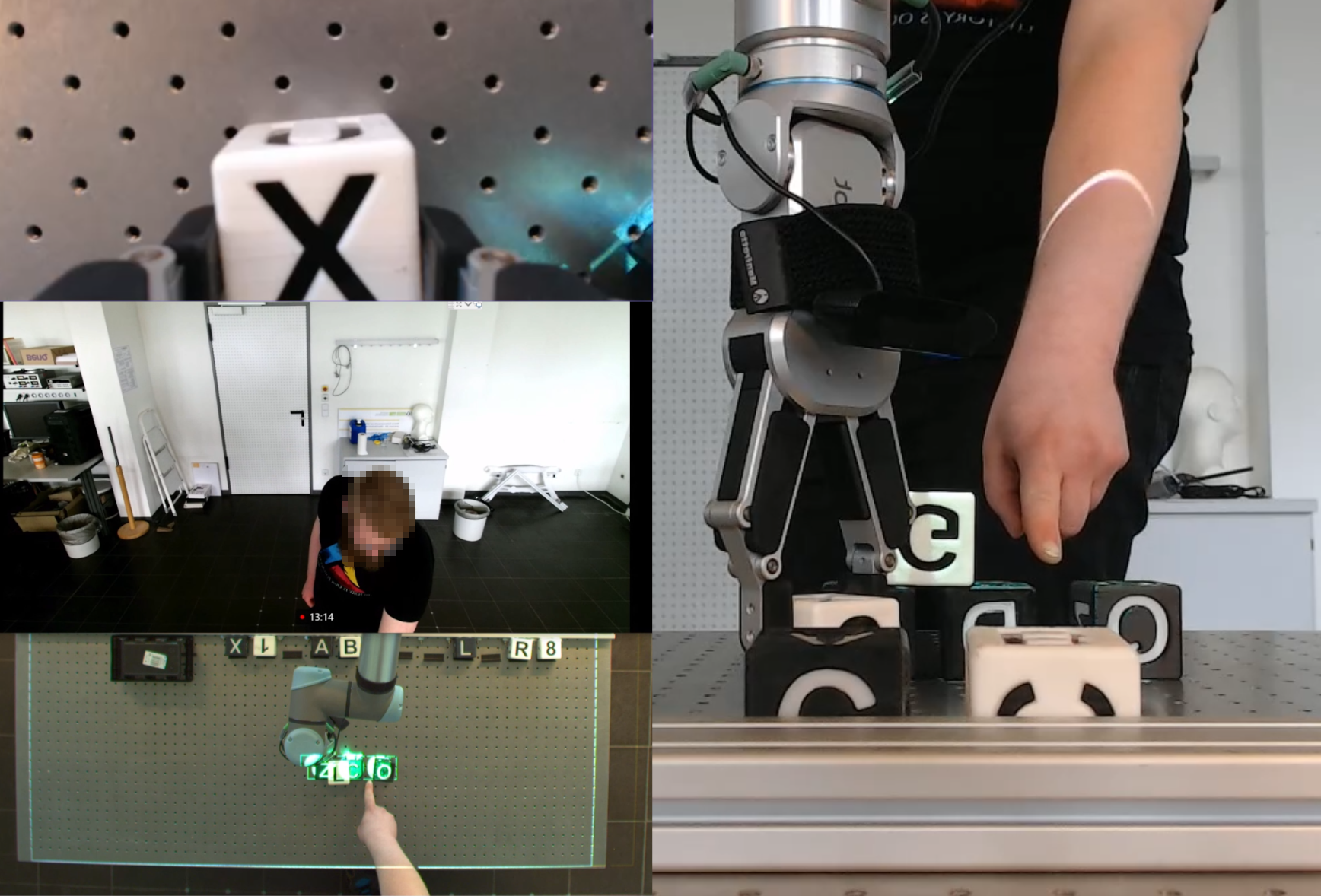

RoSA: Evaluation of Touch and Speech Input Modalities for On-Site HRI and Telerobotics

June 06, 2025

This study evaluates the RoSA framework in hybrid scenarios, comparing touch and speech input for both local and remote human–robot...

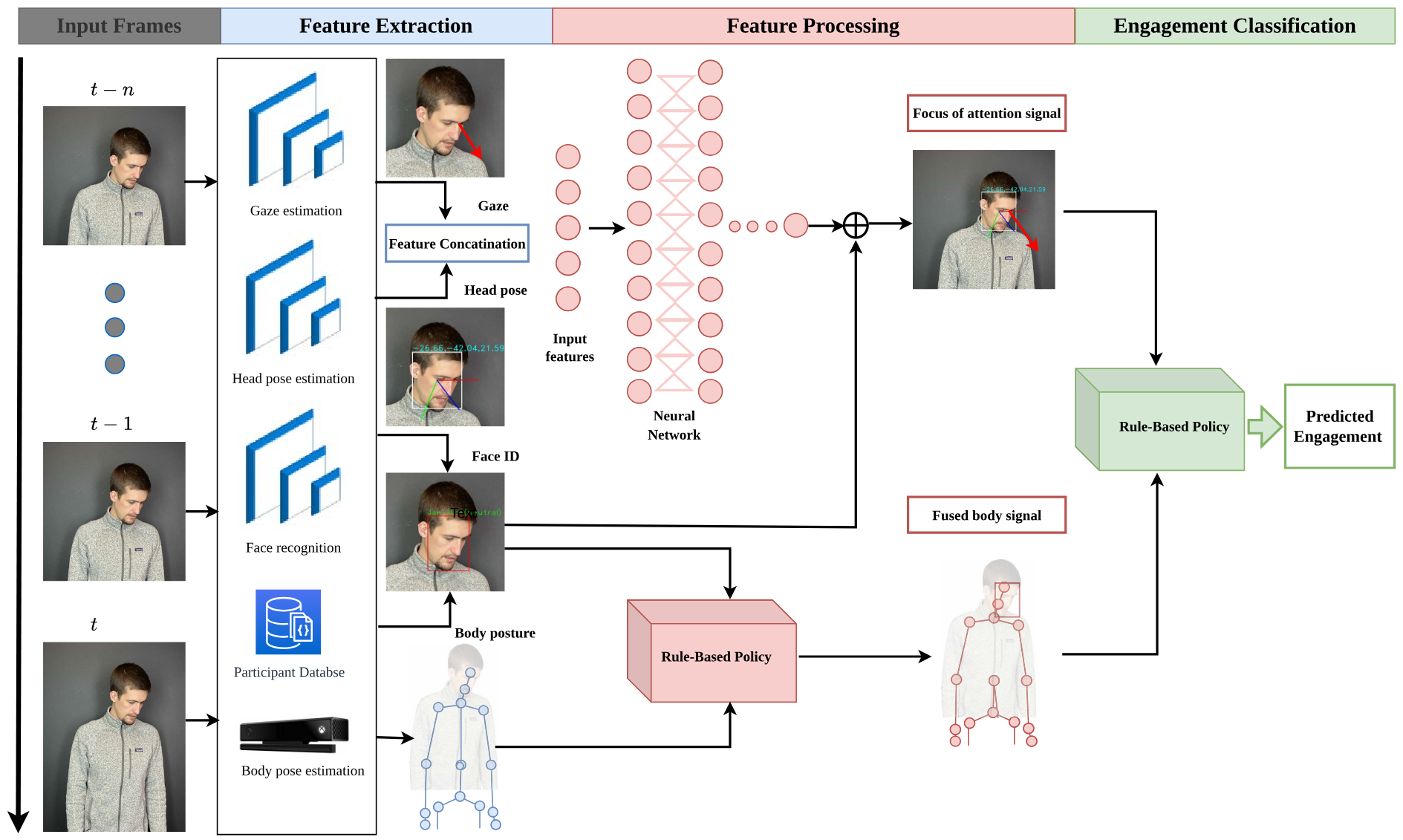

Multimodal Engagement Prediction in Multiperson Human–Robot Interaction

June 13, 2022

A real-time multimodal system for predicting engagement and disengagement in collaborative industrial HRI, using deep learning and rule-based approaches.

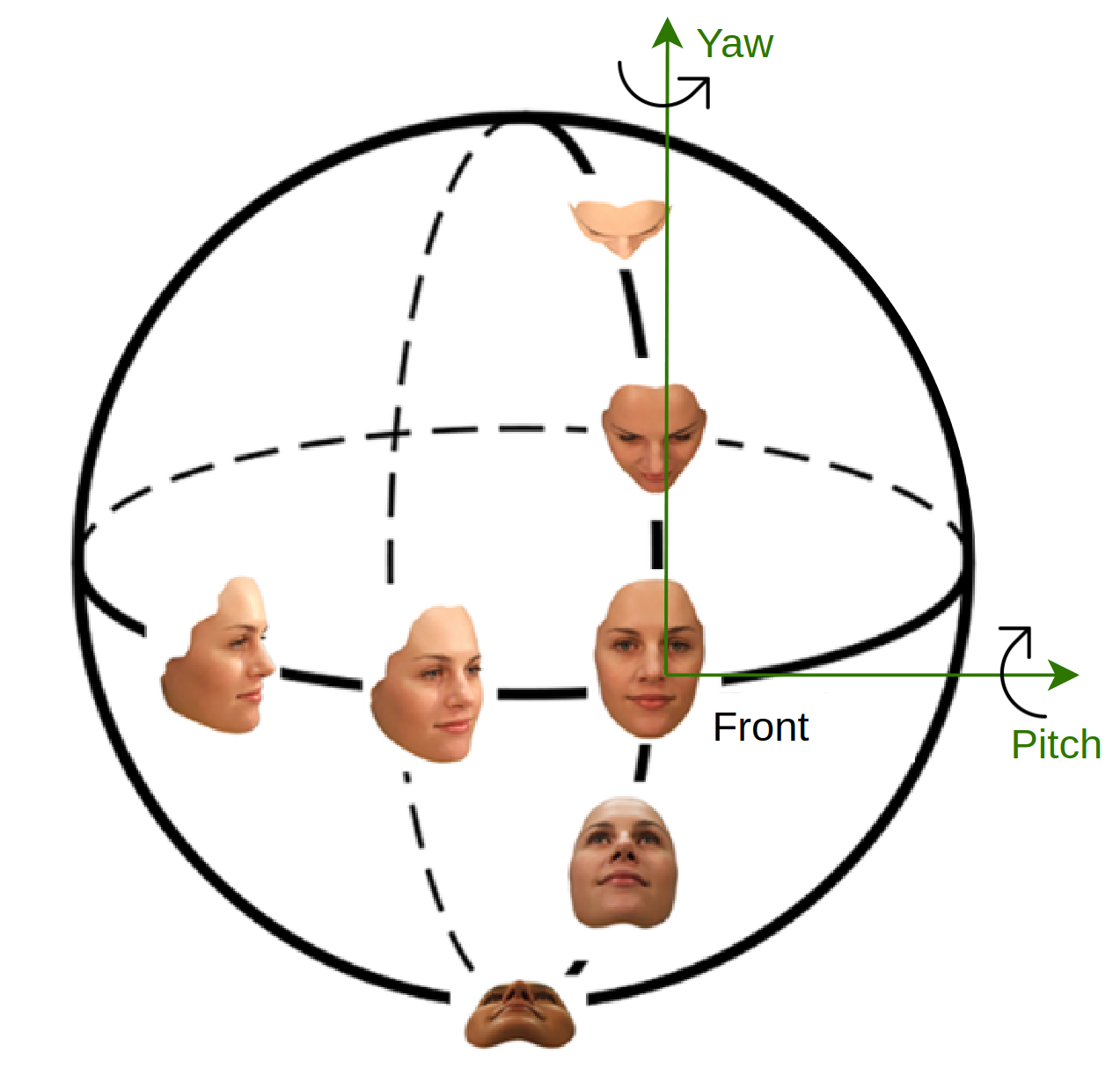

Face Recognition and Tracking Framework for Human–Robot Interaction

May 30, 2022

A real-time face recognition and tracking system for intuitive HRI, integrating lightweight CNNs and ROS.

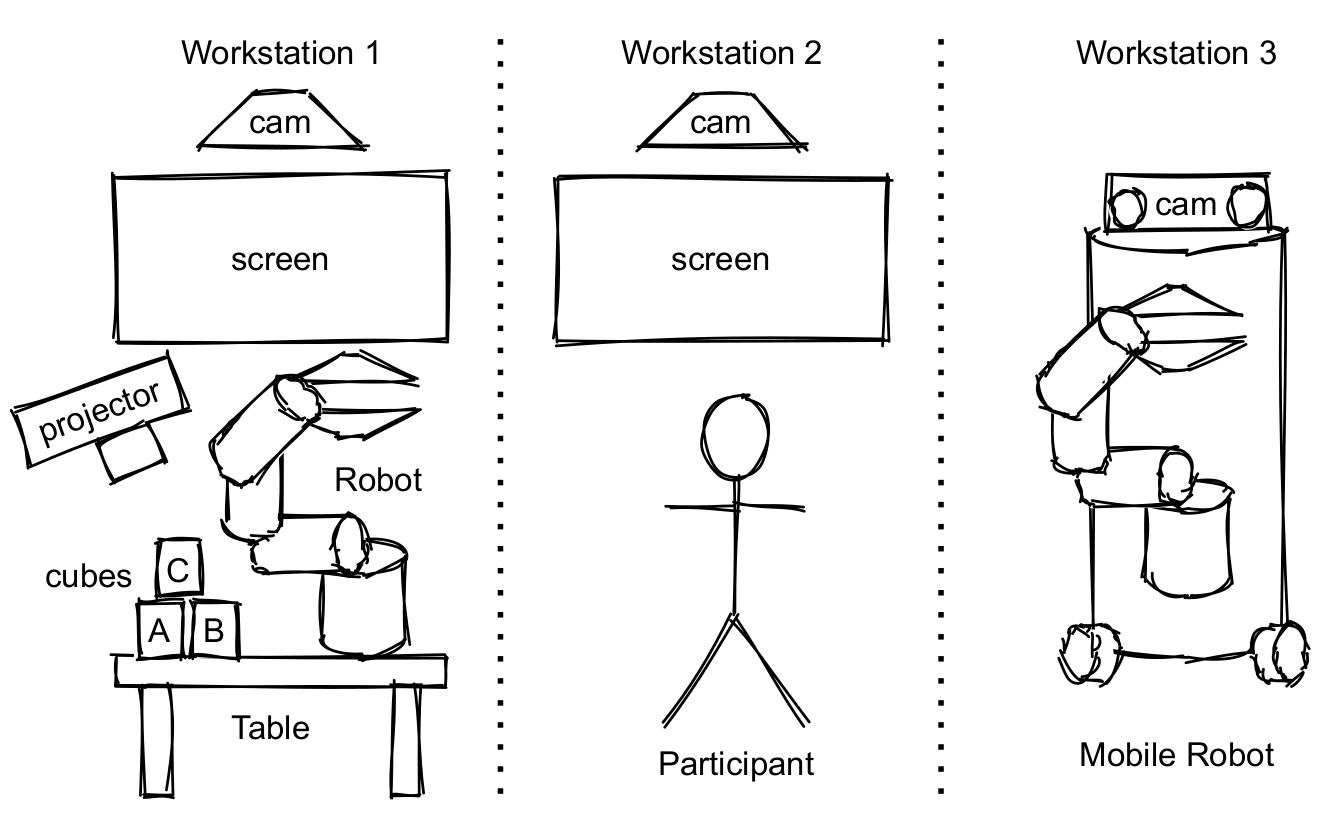

RoSA: Towards Intuitive Multi-Modal and Multi-Device Human–Robot Interaction

January 28, 2022

Building on a prior Wizard-of-Oz study, this paper presents a fully autonomous RoSA system using speech, face, and gesture input...

RoSA: Concept for an Intuitive Multi-Modal and Multi-Device Interaction System

October 27, 2021

A conceptual framework for RoSA, a multi-modal, multi-device human–robot interaction assistant with speech, gesture, and face recognition.

Robots and Wizards: An Investigation Into Natural Human–Robot Interaction

November 19, 2020

This study explores natural human–robot interaction using a Wizard-of-Oz approach to understand intuitive user behavior in multimodal robot control.